Logistic Regression

- we transformed the linear combination of the inputs with the logistic (sigmoid) function

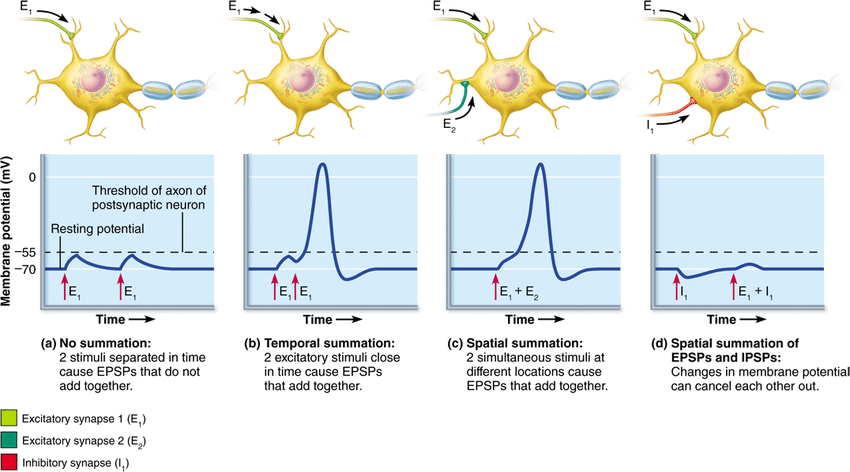

- in artificial neural networks (ANNs) this function is called the activation function

- in the brain, neurons only fire if the activation of the input neurons passes a certain threshold
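A minimal sketch of the transformation above, assuming NumPy and made-up values for the weights `w`, the bias `b` and the input `x` (none of them are from the slides): the linear combination `z = w·x + b` is passed through the logistic function, which turns it into an activation between 0 and 1.

```python
import numpy as np

def sigmoid(z):
    """Logistic function: squashes any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

# hypothetical weights, bias and input (illustration only)
w = np.array([0.5, -1.0, 2.0])
b = -0.5
x = np.array([1.0, 0.0, 0.5])

z = w @ x + b            # linear combination, as in linear regression
activation = sigmoid(z)  # transformed into a value between 0 and 1
print(z, activation)
```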

Activation Function

- in ANNs this behavior is modeled using different activation functions (e.g. step, sigmoid, tanh, ReLU)
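The common activation functions can be sketched in a few lines of NumPy. This is an illustration, not the slides' own code; the threshold of the step function is assumed to be 0 (some texts use `z >= 0` instead).

```python
import numpy as np

def step(z):
    """Step function (perceptron): fires (1) only if z passes the threshold 0."""
    return np.where(z > 0, 1.0, 0.0)

def sigmoid(z):
    """Logistic function: a smooth version of the step, output in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def tanh(z):
    """Hyperbolic tangent: output in (-1, 1)."""
    return np.tanh(z)

def relu(z):
    """Rectified linear unit: passes positive values, 0 otherwise."""
    return np.maximum(0.0, z)

z = np.array([-2.0, 0.0, 2.0])
for name, f in [("step", step), ("sigmoid", sigmoid), ("tanh", tanh), ("relu", relu)]:
    print(name, f(z))
```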

Perceptron

an artificial neuron using a step function as the activation function

- linear classifier

- not very powerful: it can only separate classes that are linearly separable (e.g. it cannot learn XOR)

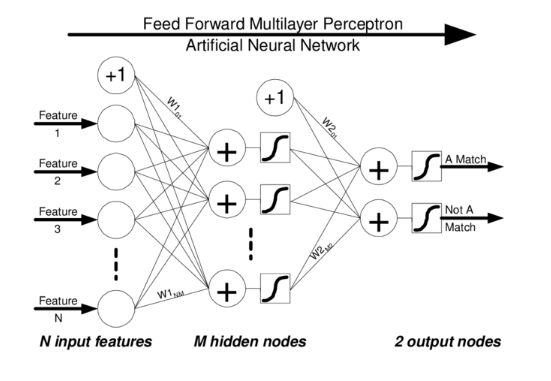
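A minimal sketch of a single perceptron, assuming NumPy. The training loop uses the classic perceptron learning rule `w <- w + lr * (y - y_hat) * x`, which is not on the slide and is shown only for illustration. Logical AND is linearly separable, so the perceptron can learn it; XOR is not, so the same loop would never reach zero errors there.

```python
import numpy as np

def step(z):
    # step activation: 1 if the weighted sum passes the threshold 0, else 0
    return (z > 0).astype(int)

def train_perceptron(X, y, lr=1.0, epochs=100):
    """Perceptron learning rule: adjust weights only when a point is misclassified."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        errors = 0
        for xi, yi in zip(X, y):
            y_hat = step(xi @ w + b)
            update = lr * (yi - y_hat)
            w += update * xi
            b += update
            errors += int(update != 0)
        if errors == 0:  # every point classified correctly -> stop
            break
    return w, b

# logical AND: a linearly separable toy problem
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 0, 0, 1])
w, b = train_perceptron(X, y)
print(step(X @ w + b))  # -> [0 0 0 1]
```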

Multi-layer Perceptron

- each green and red dot is a perceptron (think neuron)

- each connection is associated with a weight

- Input Layer: placeholder for the input values

- Output Layer: perceptron for the predicted variable

- Hidden Layers: more interconnected perceptrons (many layers = deep learning)

https://machinelearninggeek.com/multi-layer-perceptron-neural-network-using-python/
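In code, the weights of an MLP are usually stored as one matrix per pair of consecutive layers. The sketch below assumes NumPy, hypothetical layer sizes (784 inputs, two hidden layers with 16 nodes, 10 outputs) and an additional bias per node, which the slide does not mention.

```python
import numpy as np

# hypothetical layer sizes: input, hidden 1, hidden 2, output
layer_sizes = [784, 16, 16, 10]

rng = np.random.default_rng(0)
# one weight matrix per pair of consecutive layers, shape (nodes_out, nodes_in):
# entry W[j, k] is the weight of the connection from node k to node j
weights = [rng.normal(size=(n_out, n_in))
           for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])]
biases = [np.zeros(n_out) for n_out in layer_sizes[1:]]

for W in weights:
    print(W.shape)  # (16, 784), (16, 16), (10, 16)

n_params = sum(W.size for W in weights) + sum(b.size for b in biases)
print(n_params)     # 13002
```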

More detailed view

- the activation of each neuron depends on the activation of the neurons that come before (feed-forward network)

https://www.stateoftheart.ai/concepts/f83ec537-a447-4727-b1ff-e6dff4363c14
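The feed-forward idea can be sketched as a loop over the layers: each layer's activations are computed from the previous layer's activations. This assumes NumPy, sigmoid activations everywhere and a tiny hypothetical network (3 inputs, 2 hidden nodes, 1 output) with random weights.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(x, weights, biases):
    """Feed-forward pass: returns the activations of every layer, input first."""
    a = x
    activations = [a]
    for W, b in zip(weights, biases):
        a = sigmoid(W @ a + b)  # weighted sum of the previous layer, then activation
        activations.append(a)
    return activations

# tiny hypothetical network: 3 inputs -> 2 hidden nodes -> 1 output
rng = np.random.default_rng(42)
weights = [rng.normal(size=(2, 3)), rng.normal(size=(1, 2))]
biases = [np.zeros(2), np.zeros(1)]

x = np.array([0.2, 0.7, 0.1])
for layer, a in enumerate(forward(x, weights, biases)):
    print(layer, a)
```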

Hyper-Parameters for MLPs

- Architecture of the network

  - choice of activation functions

  - number of hidden layers

  - number of nodes in the hidden layers

  - connectedness of the hidden layers (dropout)

- In- and outputs

  - feature selection

  - representation of the in- and outputs (encoding, scaling, etc.)

- Training hyper-parameters

  - training algorithm

  - learning rate
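Several of these hyper-parameters map directly onto arguments of scikit-learn's `MLPClassifier`, so they can be tuned with a grid search. A sketch, assuming scikit-learn and a synthetic toy dataset from `make_classification` (not from the slides); `MLPClassifier` has no dropout option, and feature selection or scaling would go into a separate `Pipeline` step.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV
from sklearn.neural_network import MLPClassifier

# synthetic toy data (assumption, for illustration only)
X, y = make_classification(n_samples=200, n_features=10, random_state=0)

param_grid = {
    "hidden_layer_sizes": [(10,), (10, 10)],  # number of hidden layers and nodes
    "activation": ["logistic", "relu"],       # activation function
    "solver": ["sgd", "adam"],                # training algorithm
    "learning_rate_init": [0.001, 0.01],      # learning rate
}
search = GridSearchCV(MLPClassifier(max_iter=500, random_state=0), param_grid, cv=3)
search.fit(X, y)
print(search.best_params_, search.best_score_)
```

Every combination is trained once per fold (here 16 combinations x 3 folds), which is why large grids quickly become expensive.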

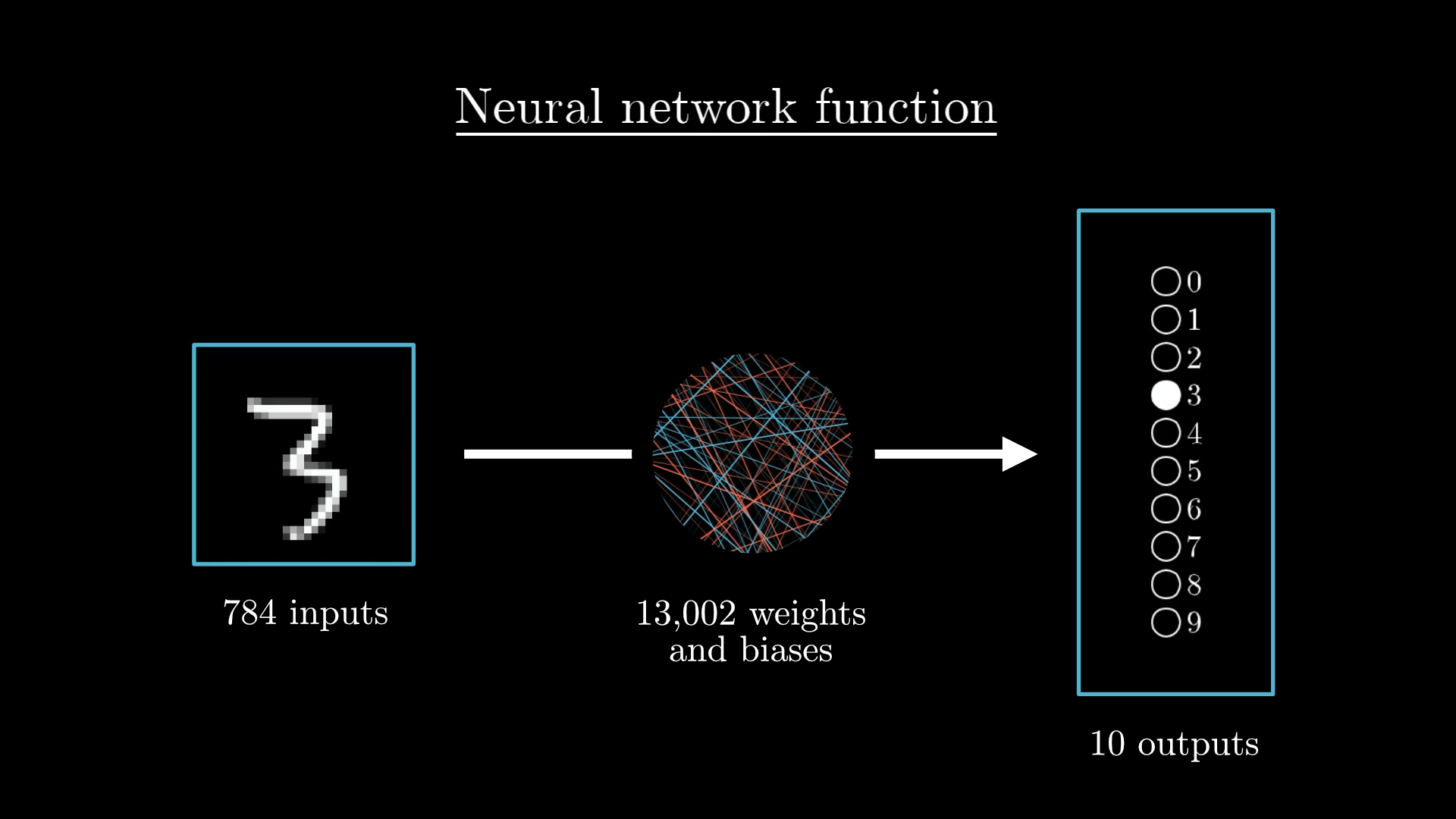

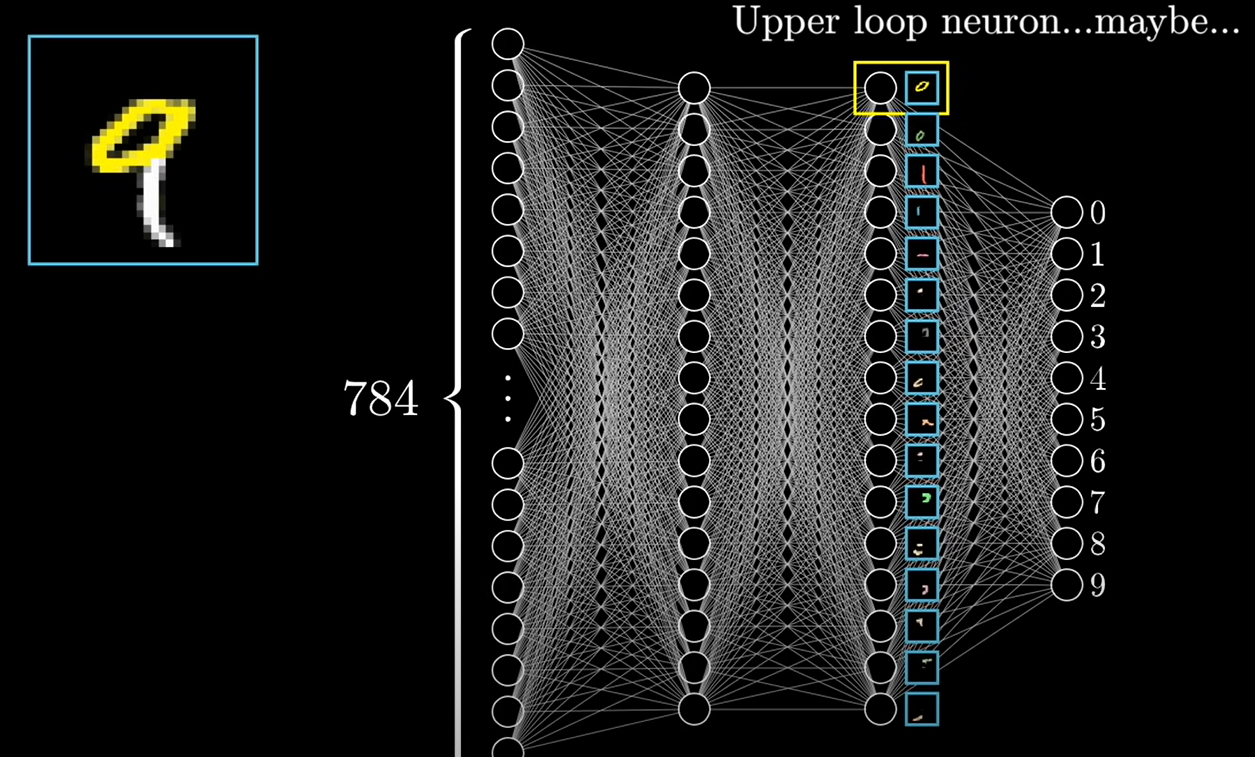

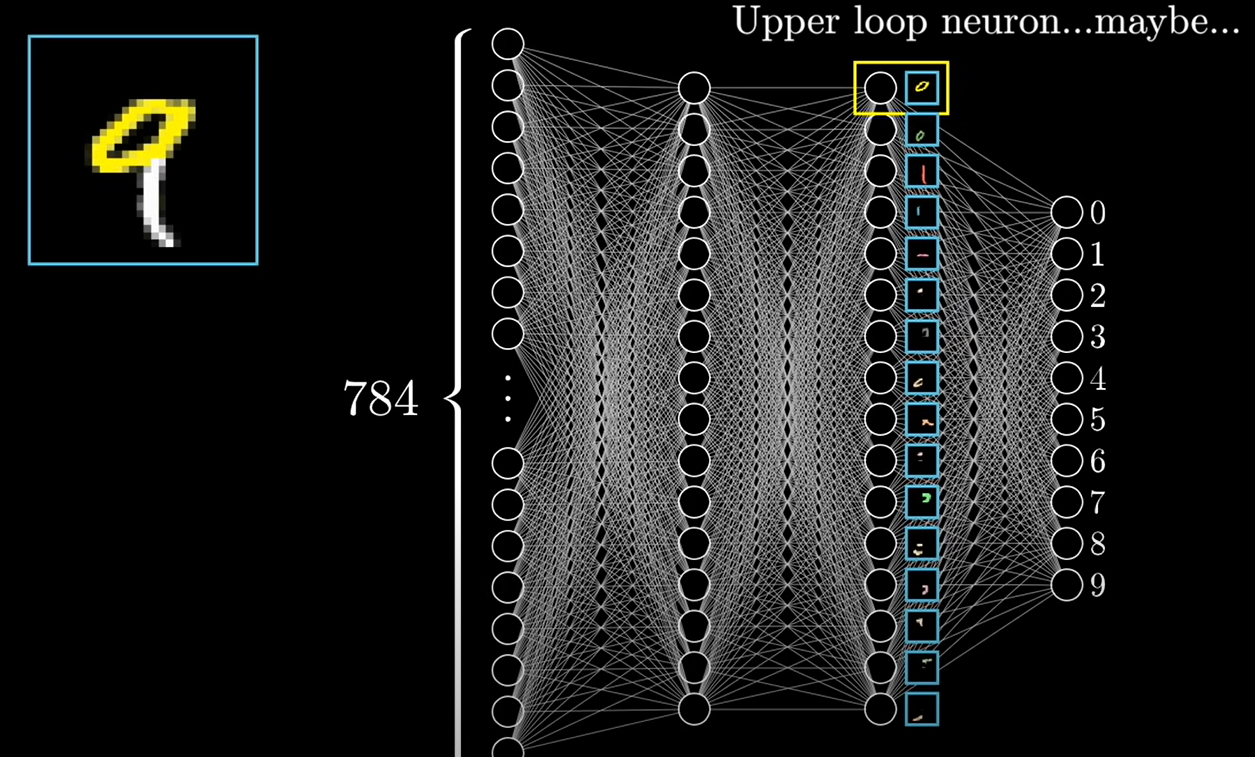

Example

- 10 nodes in the output-layer (one for each class)

- two hidden layers, fully connected

- activation function in the output-layer should represent the probability of the class

- What are the features in the input layer?

https://www.3blue1brown.com/lessons/neural-networks


- Pixel values in 8-bit grey-scale (0 to 255)

- Normalized pixel values between 0 and 1

- Flattened input vector and notation for input layer
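The preprocessing of one image can be sketched with NumPy. The 28x28 image size is an assumption (the size used in the linked 3Blue1Brown digit example), and the pixel values here are random placeholders rather than a real digit.

```python
import numpy as np

# hypothetical 28x28 grey-scale image with 8-bit pixel values (0 = black, 255 = white)
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(28, 28), dtype=np.uint8)

x = image.astype(np.float64) / 255.0  # normalize: values between 0 and 1
x = x.reshape(-1)                     # flatten: 28 * 28 = 784 input nodes
print(x.shape, x.min(), x.max())
```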

What is the meaning of the output layer?

- depending on the activation function of the output layer: a vector with values between 0 and 1 that indicate the class (e.g. one probability per class)

- Output layer shows high confidence in the

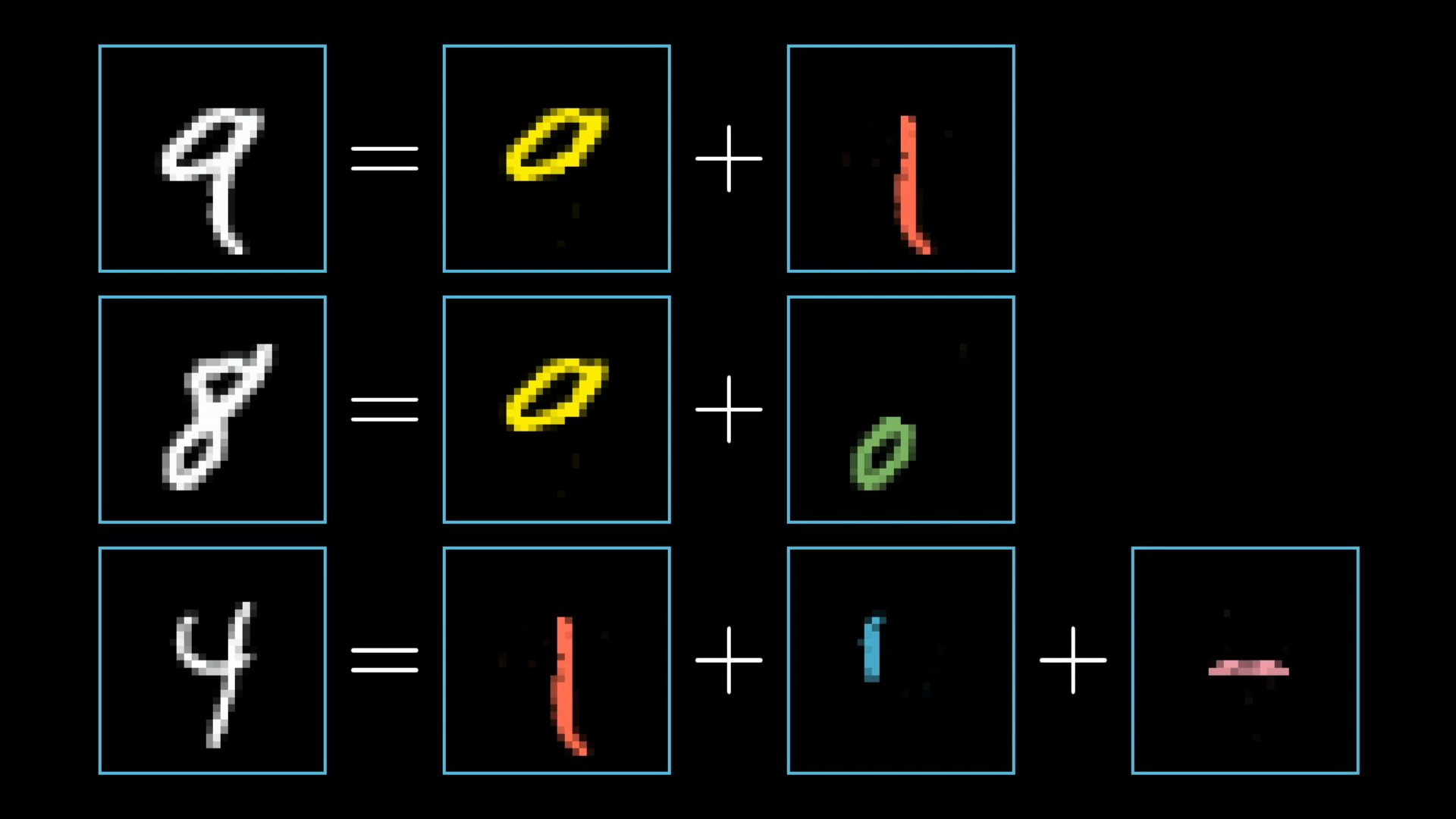
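One common choice for such an output layer is the softmax function, which turns the raw scores of the 10 output nodes into probabilities that sum to 1; the predicted class is the node with the highest value. A sketch assuming NumPy and hypothetical output scores (softmax is named here as one option, the slides leave the activation open).

```python
import numpy as np

def softmax(z):
    """Turns raw output scores into probabilities that sum to 1."""
    e = np.exp(z - np.max(z))  # subtract the max for numerical stability
    return e / e.sum()

# hypothetical raw scores of the 10 output nodes (digits 0-9)
scores = np.array([0.1, 0.3, 0.2, 4.0, 0.0, 0.5, 0.1, 0.2, 1.0, 0.3])
probs = softmax(scores)
predicted_digit = int(np.argmax(probs))  # class with the highest confidence
print(predicted_digit, probs.round(3))
```

With a sigmoid on each output node instead, every value would still lie between 0 and 1, but the values would not have to sum to 1.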

Neural Networks as a Black Box

- What happens in the hidden layers?

- this is hard for humans to say, as the hundreds of weights are hard to interpret

- one way to think about it: the model deconstructs the problem into subproblems

- something like this happens in deeper, more complicated networks

- even simple networks, as in this example, are not interpretable for humans

- given the following input layer

- the first hidden layer has two nodes

- write out the matrix multiplication to get the values of the hidden nodes; assume all weights to be 1

Hint: if you are not sure about the dimensions of the matrix, start by sketching the network first
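A sketch of the same kind of calculation with a hypothetical input layer of 3 nodes (the values are made up, not the ones from the exercise) and no bias. The weight matrix has one row per hidden node and one column per input node, so it is 2x3 here; the results are the weighted sums before any activation function is applied.

```python
import numpy as np

# hypothetical input layer with 3 nodes
x = np.array([0.5, 0.25, 1.0])

# first hidden layer with 2 nodes: W has shape (2 hidden nodes, 3 inputs), all weights 1
W = np.ones((2, 3))

# [z1]   [1 1 1]   [x1]
# [z2] = [1 1 1] @ [x2]
#                  [x3]
z = W @ x  # each hidden node: 1*0.5 + 1*0.25 + 1*1.0
print(z)   # [1.75 1.75]
```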

Training ANNs

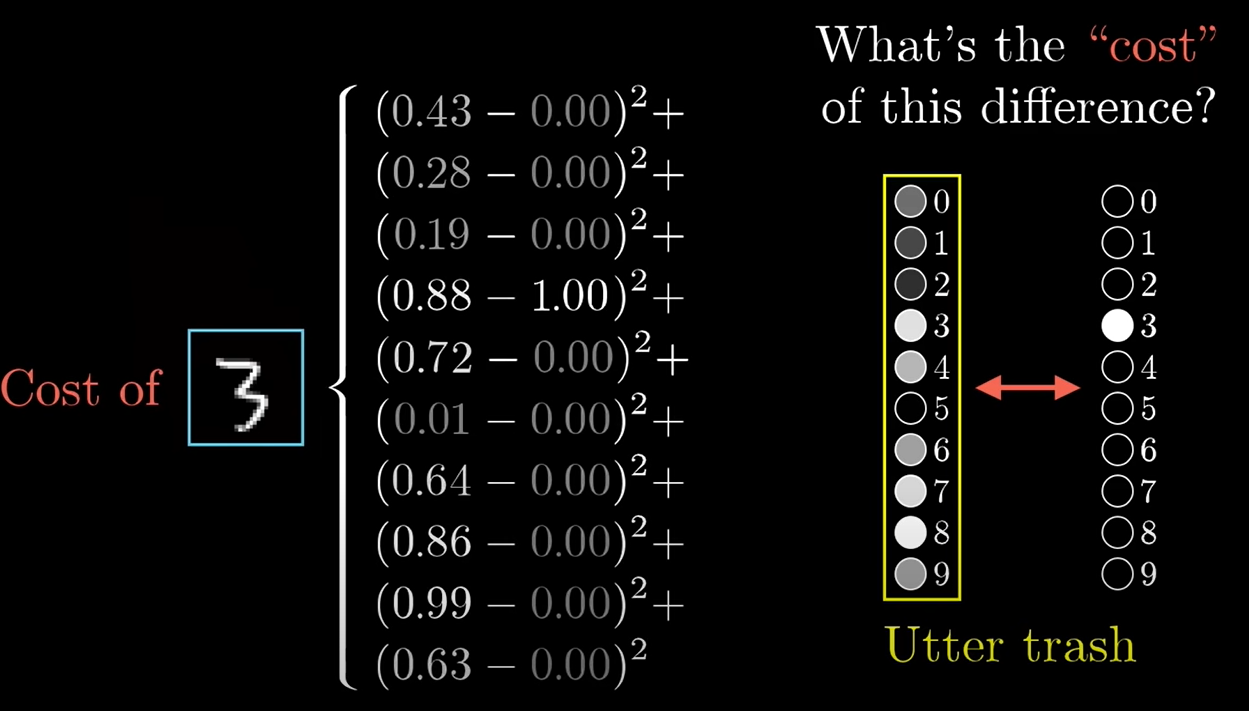

- once we have the results of the prediction

- we can compare them with the true values and calculate a cost function

Cost function

- the first thing that is new is that we now have a vector of multiple predictions

- we can still calculate a single cost value (a scalar), e.g. by summing up all squared errors for a single observation

- for a single observation, the error we make depends on the data (inputs and true values) and on the weights of the network

- We can apply gradient descent again
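A minimal sketch of the cost for one observation and a few gradient descent steps, assuming NumPy, a hypothetical observation with 4 features and 3 one-hot encoded classes, and a network with only a sigmoid output layer (no hidden layer) so the gradient stays short. The gradient formula follows from the chain rule for this specific cost and activation.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def cost(y_hat, y):
    """Sum of squared errors over all output nodes of ONE observation -> a scalar."""
    return np.sum((y_hat - y) ** 2)

# hypothetical observation: 4 input features, true class = 1 (one-hot encoded)
x = np.array([0.5, 0.1, 0.9, 0.3])
y = np.array([0.0, 1.0, 0.0])

rng = np.random.default_rng(0)
W = rng.normal(size=(3, 4))  # weights of the output layer
b = np.zeros(3)

lr = 0.5  # learning rate
costs = []
for _ in range(20):
    y_hat = sigmoid(W @ x + b)
    costs.append(cost(y_hat, y))
    # gradient of the cost w.r.t. the weighted sums z = W @ x + b (chain rule)
    delta = 2 * (y_hat - y) * y_hat * (1 - y_hat)
    W -= lr * np.outer(delta, x)  # gradient descent step for the weights
    b -= lr * delta               # ... and for the biases
print(costs[0], costs[-1])
```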

Backpropagation

- however, we have a large network

- so we would have to calculate the partial derivative of a very complicated function with respect to every single weight

- the solution: start at the output and adjust the weights layer by layer, going backwards (using the chain rule)

Great video: https://www.youtube.com/watch?v=Ilg3gGewQ5U
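A compact sketch of backpropagation for a tiny network (4 inputs, 3 hidden nodes, 2 outputs), assuming NumPy, sigmoid activations and the sum-of-squared-errors cost; the sizes, data and random weights are hypothetical. The error is computed at the output layer first and then propagated one layer back. A finite-difference check confirms the gradients, and a few gradient descent steps use them.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss_and_grads(params, x, y):
    """Forward pass, then backpropagation from the output layer to the first layer."""
    W1, b1, W2, b2 = params
    a1 = sigmoid(W1 @ x + b1)       # hidden layer
    a2 = sigmoid(W2 @ a1 + b2)      # output layer
    loss = np.sum((a2 - y) ** 2)    # sum of squared errors
    # start at the end: error at the output layer (dC/dz2)
    delta2 = 2 * (a2 - y) * a2 * (1 - a2)
    dW2, db2 = np.outer(delta2, a1), delta2
    # propagate the error one layer back with the chain rule (dC/dz1)
    delta1 = (W2.T @ delta2) * a1 * (1 - a1)
    dW1, db1 = np.outer(delta1, x), delta1
    return loss, [dW1, db1, dW2, db2]

rng = np.random.default_rng(1)
params = [rng.normal(size=(3, 4)), np.zeros(3), rng.normal(size=(2, 3)), np.zeros(2)]
x = np.array([0.2, 0.4, 0.6, 0.8])
y = np.array([1.0, 0.0])

loss0, grads = loss_and_grads(params, x, y)

# sanity check: compare backprop with a numerical (finite-difference) gradient
eps = 1e-6
max_diff = 0.0
for p, g in zip(params, grads):
    for idx in np.ndindex(p.shape):
        old = p[idx]
        p[idx] = old + eps
        plus, _ = loss_and_grads(params, x, y)
        p[idx] = old - eps
        minus, _ = loss_and_grads(params, x, y)
        p[idx] = old
        max_diff = max(max_diff, abs((plus - minus) / (2 * eps) - g[idx]))
print("max difference:", max_diff)

# gradient descent with the backpropagated gradients
lr = 0.5
for _ in range(100):
    loss, grads = loss_and_grads(params, x, y)
    params = [p - lr * g for p, g in zip(params, grads)]
print("loss before/after:", loss0, loss_and_grads(params, x, y)[0])
```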

MLP in practical application

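- in theory, an MLP with a single hidden layer (and enough nodes) can approximate any continuous function (universal approximation theorem)

- in practice, an MLP with a few layers often performs similarly to a tree-based model

- because of the large number of hyper-parameters and weights, MLPs can be hard to train

- learning curves are important to check whether training works (e.g. to spot over- or underfitting)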
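A learning curve can be computed with scikit-learn's `learning_curve`, which trains the model on growing subsets of the training data and reports training and validation scores. A sketch, assuming scikit-learn and a synthetic dataset from `make_classification`; plotting the two curves is left out.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import learning_curve
from sklearn.neural_network import MLPClassifier

# synthetic toy data (assumption, for illustration only)
X, y = make_classification(n_samples=300, n_features=10, random_state=0)
clf = MLPClassifier(hidden_layer_sizes=(30, 30), max_iter=300, random_state=0)

train_sizes, train_scores, val_scores = learning_curve(
    clf, X, y, train_sizes=[0.2, 0.4, 0.6, 0.8, 1.0], cv=5)

# a large gap between training and validation accuracy hints at overfitting
for n, tr, va in zip(train_sizes, train_scores.mean(axis=1), val_scores.mean(axis=1)):
    print(f"{n:4d} training samples: train acc {tr:.2f}, validation acc {va:.2f}")
```

After `clf.fit(X, y)`, the attribute `clf.loss_curve_` additionally holds the training loss per iteration (for the `sgd` and `adam` solvers).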

ANN with sklearn

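from sklearn.neural_network import MLPClassifier

# X_train, y_train: training data, e.g. from a previous train/test split
clf = MLPClassifier(hidden_layer_sizes=(30, 30),  # two hidden layers with 30 nodes each
                    activation="logistic",        # sigmoid activation in the hidden layers
                    solver="sgd",                 # stochastic gradient descent
                    learning_rate_init=0.1,       # learning rate (step size of the updates)
                    max_iter=300).fit(X_train, y_train)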

ANN with keras and tensorflow

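from tensorflow.keras import Input
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense

# define the keras model
model = Sequential()
model.add(Input(shape=(8,)))               # 8 input features
model.add(Dense(12, activation='relu'))    # first hidden layer
model.add(Dense(8, activation='relu'))     # second hidden layer
model.add(Dense(1, activation='sigmoid'))  # output: probability of the positive class
model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])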

Deep Learning

- Modern architectures have hundreds of specialized layers

- Convolutional neural networks - images

- Long short-term memory networks - speech, games

- Transformers - chat-bots, translation

- Generative adversarial networks - unsupervised learning

Deep Learning in practical application

- frameworks like fastai make it easy to use pre-trained models without dealing with the low-level details (of libraries such as tensorflow, pytorch, keras)

- training large models on high-resolution data is expensive and works more efficiently on GPUs (graphics processing units) with specialized drivers