Model Evaluation / Prediction

- Evaluate the models on an unseen test-set

- Evaluate the strengths and weaknesses of the best models

2.5.2 Resampling Methods

Learning objectives

You will be able to

- explain which questions to evaluate on the training, validation and test-sets

- perform LOO and k-fold cross-validation to create models that do not over-fit the training data

Model & Feature Selection

- we want to find the best model for the data

- models can differ in

- predictors / features that go into the model

- model type (linear regression, decision tree, etc.)

- the model's capacity to over-fit

- hyperparameters (parameters that tweak the model)

- first, we focus on the predictors / features

Finding the best predictors / features

- We have 6 possible predictor variables in the data set to predict body_mass_g: species, island, bill_length_mm, bill_depth_mm, flipper_length_mm, sex

- Encoding the categorical variables as binary (dummy) variables, we have 8 possible predictors:

- isAdelie, isGentoo, fromTorgersen, fromBiscoe, bill_length_mm, bill_depth_mm, flipper_length_mm, isFemale

- from these, we can build 2^8 = 256 possible linear models (each predictor is either in or out of the model):

- ...
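Each candidate model corresponds to one subset of the 8 predictors, which is where the 2^8 comes from. A minimal Python sketch that enumerates them, assuming a model is identified only by its set of predictors (no interactions or transformations):

```python
from itertools import combinations

# The 8 binary-encoded predictors listed above
predictors = [
    "isAdelie", "isGentoo", "fromTorgersen", "fromBiscoe",
    "bill_length_mm", "bill_depth_mm", "flipper_length_mm", "isFemale",
]


def all_subsets(features):
    """Return every subset of `features`, from the null model (empty) to the full model."""
    subsets = []
    for size in range(len(features) + 1):
        subsets.extend(combinations(features, size))
    return subsets


models = all_subsets(predictors)
print(len(models))   # 256 = 2**8
print(models[0])     # () -> the null model (intercept only)
print(models[-1])    # the full model with all 8 predictors
```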

What we found

- It is hard to know in advance which model will be best

- We compared models in different ways:

- fit on the training set

- prediction accuracy on the training set and on the test-set

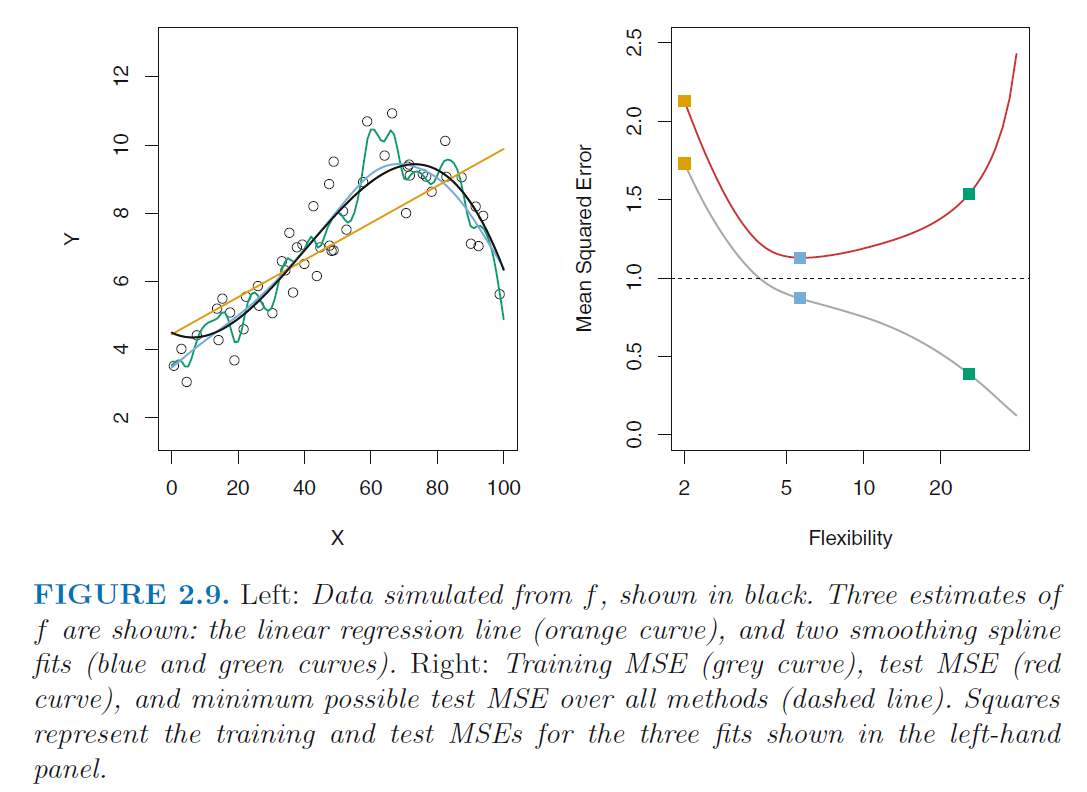

- Evaluating only on the training set can lead to over-fitting
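To see why training-set evaluation alone is misleading, here is a small sketch on hypothetical toy data (a noisy straight line, not the penguin data), assuming polynomial degree as the measure of model flexibility and MSE as the error measure:

```python
import numpy as np

# Hypothetical toy data: a noisy linear relationship
rng = np.random.default_rng(0)
x_train = np.linspace(0, 1, 8)
y_train = 2 * x_train + rng.normal(scale=0.3, size=x_train.size)
x_test = np.linspace(0.05, 0.95, 8)
y_test = 2 * x_test + rng.normal(scale=0.3, size=x_test.size)


def mse(y, y_hat):
    """Mean squared error between observed and predicted values."""
    return float(np.mean((y - y_hat) ** 2))


results = []
for degree in range(1, 7):
    coefs = np.polyfit(x_train, y_train, degree)          # least-squares polynomial fit
    train_err = mse(y_train, np.polyval(coefs, x_train))
    test_err = mse(y_test, np.polyval(coefs, x_test))
    results.append((degree, train_err, test_err))
    print(f"degree {degree}: train MSE {train_err:.3f}, test MSE {test_err:.3f}")
```

Because each higher-degree polynomial contains all lower-degree ones, the training MSE can only stay equal or go down as the degree grows; the test MSE has no such guarantee and typically gets worse for the most flexible fits.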

Over-fitting the Training Data

- What we really want to know: which model will perform best on new, unseen data?

- We need to select models on a set that is not the training data

- After selecting a model, we still want to test how well it performs on unseen data

- validation-set: a set on which we compare the trained models to find the best features and ML algorithms for the task

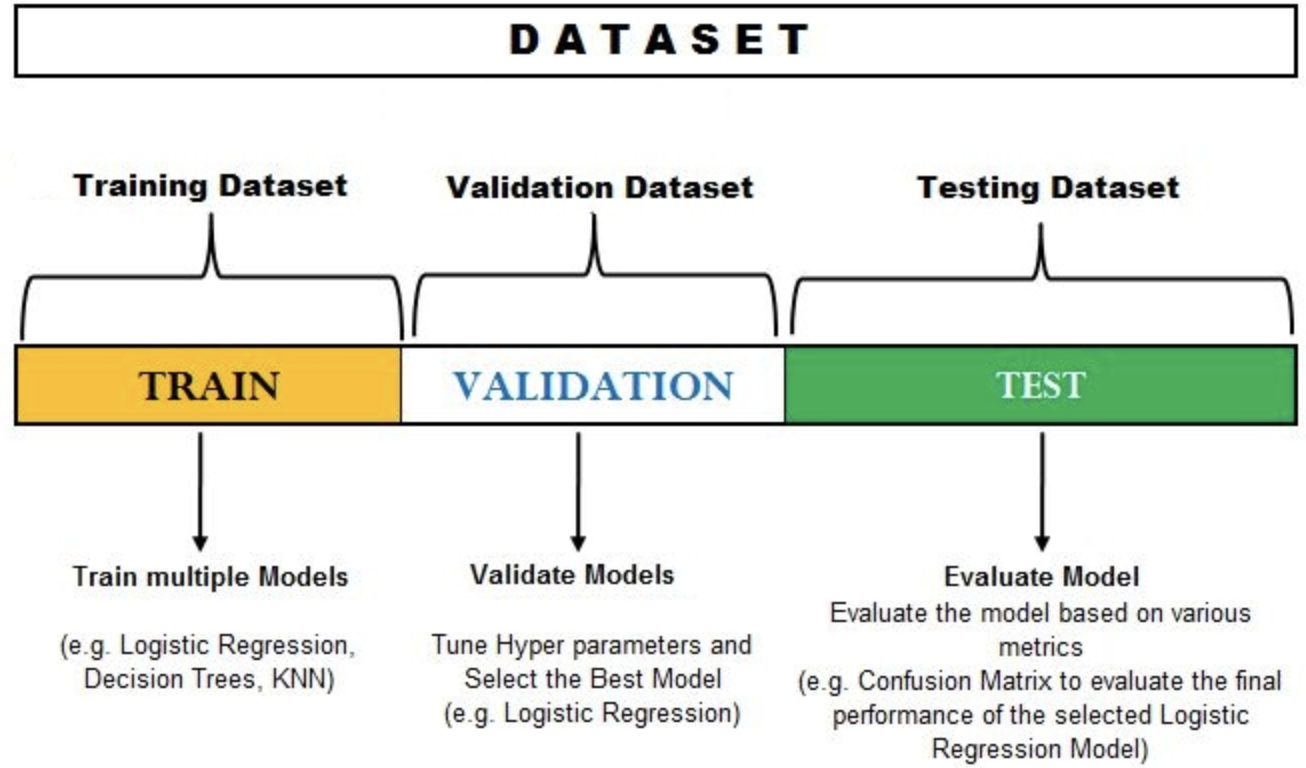

We must divide the data into three parts

https://www.sxcco.com/?category_id=4128701

| Training-Set | Validation-Set | Test-Set |
|---|---|---|
| for fitting the models | for selecting the models (e.g., which parameters to include) | held-out data, used to check that the selected model also performs well on new data |

How do we split the data?

- Often, we do not have much data, so every split is a trade-off:

- if we choose a small training set:

- we only use a small proportion of the data to learn

- powerful (flexible) models fitted on sparse data tend to over-fit

- if we choose a small validation / test-set:

- the set can be unusual just by chance

- which leads to misleading results

https://www.sxcco.com/?category_id=4128701

The Validation-Set Approach

- split the data once

- / / are common proportions

data = [3, 7, 13, 15, 22, 25, 50, 91]

training = [3, 7, 13, 50]

validation = [15, 91]

test = [22, 25]

- note that we can shuffle the data randomly before splitting: the training set is not simply the first four values!
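A minimal sketch of a single shuffled three-way split in Python. The 50 / 25 / 25 fractions are an assumption chosen only to reproduce the 4 / 2 / 2 sizes of the example above, and `train_val_test_split` is a hypothetical helper, not a library function:

```python
import random


def train_val_test_split(data, val_frac=0.25, test_frac=0.25, seed=None):
    """Shuffle a copy of `data` once, then cut it into training, validation and test parts."""
    items = list(data)
    random.Random(seed).shuffle(items)        # random order, so "training" is not just the first values
    n_test = round(len(items) * test_frac)
    n_val = round(len(items) * val_frac)
    test = items[:n_test]
    validation = items[n_test:n_test + n_val]
    training = items[n_test + n_val:]
    return training, validation, test


data = [3, 7, 13, 15, 22, 25, 50, 91]
training, validation, test = train_val_test_split(data, seed=1)
print(training, validation, test)
```

A different `seed` puts different observations into each part, so the resulting model comparison can change with it.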

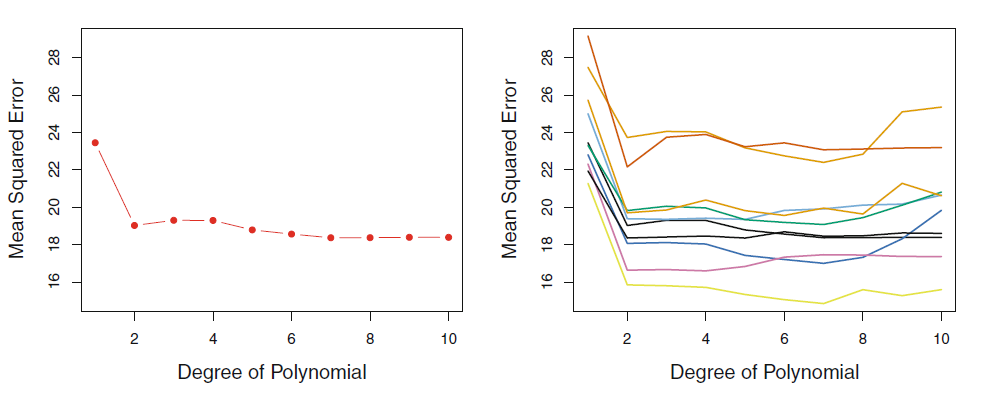

Problems with the Validation-Set Approach

- plot: test-set error vs. polynomial degree (complexity) of the model

- Left: one split of the data; Right: ten different random splits

- Results depend on how we split the data
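This dependence on the split is easy to reproduce. The sketch below assumes a hypothetical stand-in model that always predicts the mean of its training values, treats the example numbers (without the test-set) as target values, and computes the validation MSE for ten random 50/50 splits:

```python
import random
from statistics import mean


def validation_mse(data, seed):
    """One random 50/50 training/validation split, scored with a mean-only model."""
    items = list(data)
    random.Random(seed).shuffle(items)
    half = len(items) // 2
    training, validation = items[:half], items[half:]
    prediction = mean(training)                 # the "model": predict the training mean
    return mean((y - prediction) ** 2 for y in validation)


data = [3, 7, 13, 15, 50, 91]
errors = [validation_mse(data, seed) for seed in range(10)]
for seed, err in enumerate(errors):
    print(f"split {seed}: validation MSE = {err:.1f}")
```

The same model on the same data gets quite different scores, depending only on which observations ended up in the validation-set.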

Leave-One-Out Cross-Validation (LOO)

- put only one observation in the validation-set (the test-set stays fixed)

- repeat this so that every remaining observation is left out exactly once

data = [3, 7, 13, 15, 22, 25, 50, 91]

test = [22, 25]

training_1 = [7, 13, 15, 50, 91]

validation_1 = [3]

training_2 = [3, 13, 15, 50, 91]

validation_2 = [7]

...
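The LOO splits above can be generated mechanically. A sketch, assuming the values are unique (a real implementation would split by row index rather than by value):

```python
def loo_splits(data, test):
    """Yield (training, validation) pairs: every non-test observation is the validation-set once."""
    remaining = [x for x in data if x not in test]
    for i in range(len(remaining)):
        validation = [remaining[i]]
        training = remaining[:i] + remaining[i + 1:]
        yield training, validation


data = [3, 7, 13, 15, 22, 25, 50, 91]
test = [22, 25]
splits = list(loo_splits(data, test))
print(len(splits))   # 6
print(splits[0])     # ([7, 13, 15, 50, 91], [3])
print(splits[1])     # ([3, 13, 15, 50, 91], [7])
```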

Averaging the Cross-Validation results

data = [3, 7, 13, 15, 22, 25, 50, 91]

- With n observations in the original data set

- we get n models with different prediction errors on their validation-sets

- We can still calculate the overall error the same way, as the average of these n errors

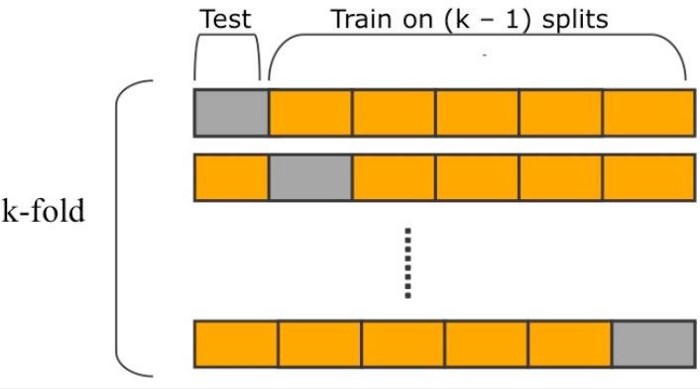
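Written out, and assuming the prediction error on each validation-set is measured as a squared error (the usual choice for regression), the LOO estimate is the plain average of the n individual errors, where the hat term is the prediction for observation i from the model trained without it:

```latex
\mathrm{MSE}_i = \left(y_i - \hat{y}_{(-i)}\right)^2,
\qquad
\mathrm{CV}_{(n)} = \frac{1}{n} \sum_{i=1}^{n} \mathrm{MSE}_i
```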

k-fold Cross-Validation

- instead of folds of length 1, the data is split into k folds of (roughly) equal length

data = [3, 7, 13, 15, 22, 25, 50, 91]

test = [22, 25]

training_fold_1 = [13, 15, 50, 91]

validation_fold_1 = [3, 7]

training_fold_2 = [3, 7, 50, 91]

validation_fold_2 = [13, 15]

...

https://medium.com/coders-mojo/quick-recap-most-important-projects-data-science-machine-learning-programming-tricks-and-c7d99a7a2391
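A sketch that reproduces the folds of the example, assuming k = 3, consecutive folds without shuffling (as in the example) and unique values:

```python
def kfold_splits(data, test, k):
    """Split the non-test observations into k consecutive folds of (roughly) equal size."""
    remaining = [x for x in data if x not in test]
    n = len(remaining)
    # fold sizes differ by at most one when n is not divisible by k
    sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        validation = remaining[start:start + size]
        training = remaining[:start] + remaining[start + size:]
        yield training, validation
        start += size


data = [3, 7, 13, 15, 22, 25, 50, 91]
test = [22, 25]
for training, validation in kfold_splits(data, test, k=3):
    print(training, validation)
```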

Averaging the Cross-Validation results

- With k folds

- we get k different validation errors (one per fold), which we average
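A worked example of the averaging, using the three folds from the k-fold example and, as an assumption, a trivial model that always predicts the mean of its training values. The cross-validation estimate is the plain average CV_(k) = (1/k) Σ MSE_i of the fold errors:

```python
from statistics import mean

# The three (training, validation) folds from the k-fold example
folds = [
    ([13, 15, 50, 91], [3, 7]),
    ([3, 7, 50, 91], [13, 15]),
    ([3, 7, 13, 15], [50, 91]),
]


def fold_mse(training, validation):
    """Hypothetical stand-in model: always predict the mean of the training values."""
    prediction = mean(training)
    return mean((y - prediction) ** 2 for y in validation)


fold_errors = [fold_mse(tr, va) for tr, va in folds]
cv_k = mean(fold_errors)    # average of the k fold errors
print(fold_errors)          # [1391.5625, 565.0625, 4141.25]
print(cv_k)                 # 2032.625
```

The spread between the fold errors (from about 565 to about 4141) also shows how much a single split could have misled us.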

What to use?

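- Leave-One-Out (LOO) is a special case of k-fold cross-validation with k = n

- LOO is computationally more expensive (n model fits instead of k)

- LOO has a smaller bias (each model is trained on n - 1 observations, i.e., on more data)

- LOO has a higher variance (the models we train are all very similar, while the single-observation validation-sets are very different)

- using k-fold cross-validation with k = 5 or k = 10 has been shown empirically to yield good results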

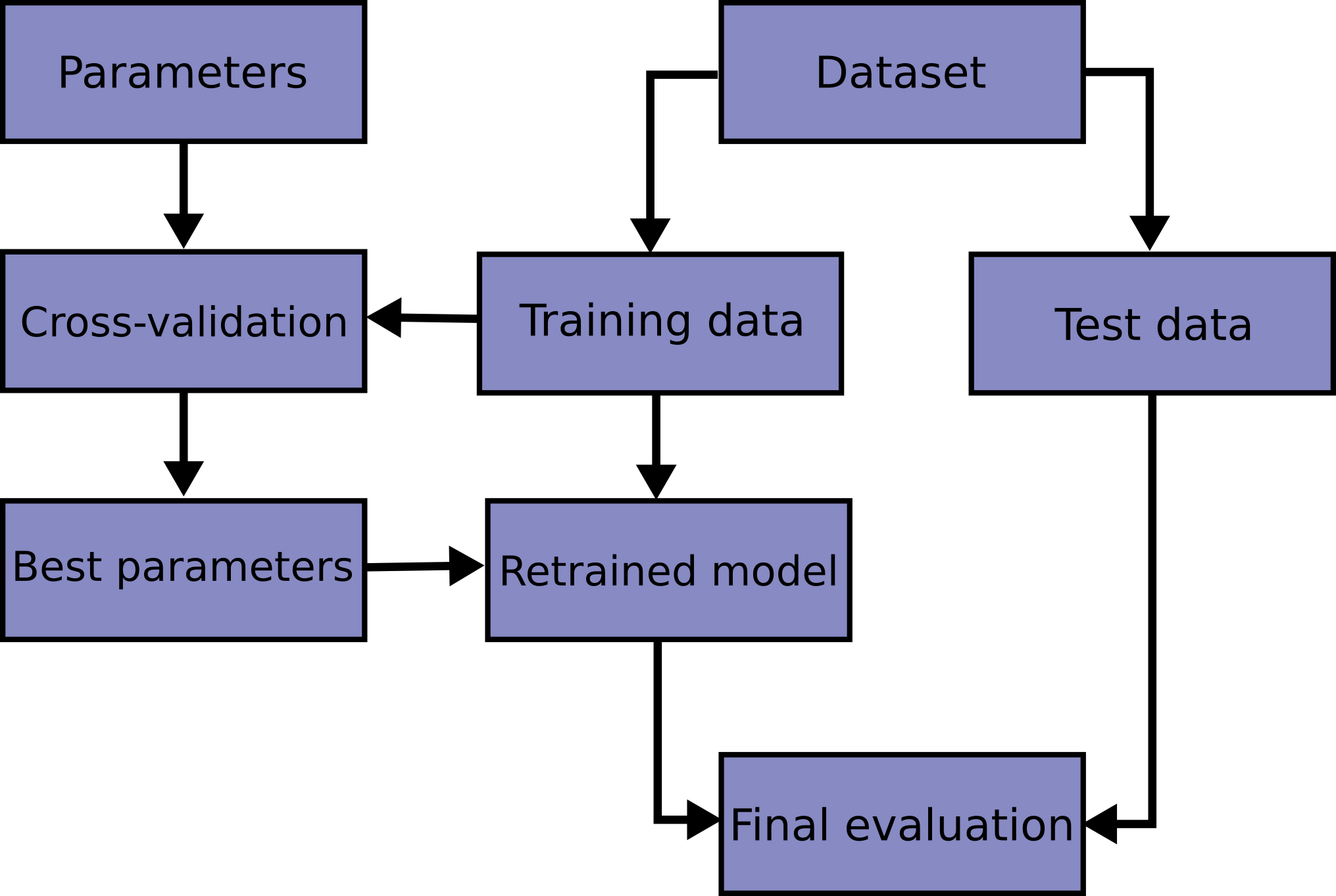
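Two of these points can be checked directly: with k = n, k-fold splitting produces exactly the LOO splits, and the number of model fits grows from k to n. A sketch, assuming consecutive (unshuffled) folds; both helpers are hypothetical:

```python
def kfold_splits(values, k):
    """Split `values` into k consecutive folds of (roughly) equal size."""
    n = len(values)
    sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start, splits = 0, []
    for size in sizes:
        validation = values[start:start + size]
        training = values[:start] + values[start + size:]
        splits.append((training, validation))
        start += size
    return splits


def loo_splits(values):
    """Leave-one-out: every observation is the validation-set exactly once."""
    return [(values[:i] + values[i + 1:], [values[i]]) for i in range(len(values))]


remaining = [3, 7, 13, 15, 50, 91]      # the example data without the test-set
assert kfold_splits(remaining, k=len(remaining)) == loo_splits(remaining)

# one model fit per split: n fits for LOO, k fits for k-fold
big = list(range(200))
print(f"LOO: {len(loo_splits(big))} fits, 5-fold: {len(kfold_splits(big, 5))} fits")
```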

A practical approach to Cross-Validation

https://scikit-learn.org/stable/modules/cross_validation.html

| Training-Set | Validation-Set | Test-Set |
|---|---|---|
| used to train the models | used to select the model, predictors and/or parameters | used to confirm the model's performance |
| part of the 5-fold CV (together with the validation data) | part of the 5-fold CV (together with the training data) | cut out at the beginning |

- note that the data should be shuffled before the split

- there are some cases where shuffling does not work (e.g., temporal data, where the order of the observations matters)
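A plain-Python sketch of this recipe, assuming 20% of the data as test-set (a hypothetical choice; the table does not fix the proportion) and assigning every k-th shuffled observation to the same fold. `holdout_then_kfold` is a hypothetical helper; scikit-learn offers `train_test_split` and `KFold` for the same steps:

```python
import random


def holdout_then_kfold(data, test_frac=0.2, k=5, seed=0):
    """Shuffle once, cut out a test-set, then build k (training, validation) folds from the rest."""
    items = list(data)
    random.Random(seed).shuffle(items)       # skip this step for temporal data
    n_test = round(len(items) * test_frac)
    test_set, rest = items[:n_test], items[n_test:]
    folds = []
    for i in range(k):
        validation = rest[i::k]              # every k-th element goes into fold i
        training = [x for j, x in enumerate(rest) if j % k != i]
        folds.append((training, validation))
    return test_set, folds


test_set, folds = holdout_then_kfold(range(100))
print(len(test_set), [len(v) for _, v in folds])   # 20 [16, 16, 16, 16, 16]
```

The test-set is never part of any fold, so it stays untouched until the final check of the selected model.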

2.5.3 Feature Selection

- given we have 2^8 = 256 possible linear models:

- ...

- how can we reliably find the best one?

- we use 5-fold cross-validation to find the best features (predictors) and parameters

Option 1: Best Subset Selection

- brute force: train all possible models on the training set

- compare the models' performance on the validation-set

- select the best model (e.g., based on the validation error)

- With 5-fold cross-validation, you will train 5 × 256 = 1,280 different models

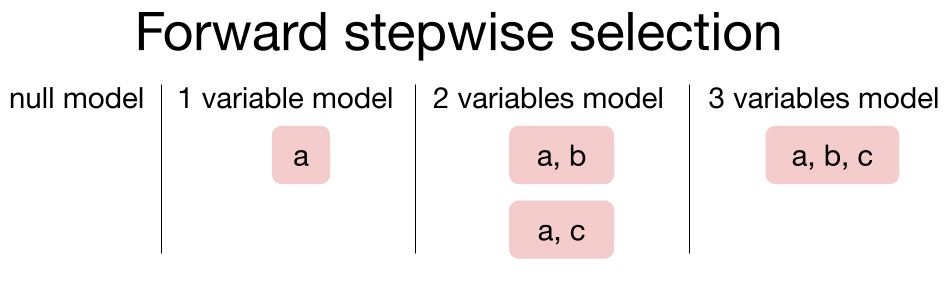
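A sketch of best subset selection with 5-fold CV. It uses hypothetical synthetic data (y depends on x0 and x1, x2 is pure noise) instead of the penguin data, ordinary least squares via NumPy, and the CV MSE as the selection criterion:

```python
from itertools import combinations

import numpy as np

# Hypothetical synthetic data: y depends on x0 and x1; x2 is pure noise
rng = np.random.default_rng(42)
n, p = 100, 3
X = rng.normal(size=(n, p))
y = 1.0 + 3.0 * X[:, 0] - 2.0 * X[:, 1] + rng.normal(scale=0.1, size=n)


def cv_mse(X, y, features, k=5):
    """k-fold CV estimate of the prediction MSE of an OLS model on the given columns."""
    n_obs = len(y)
    # design matrix: intercept column plus the selected predictors
    design = np.column_stack([np.ones(n_obs)] + [X[:, j] for j in features])
    fold_id = np.arange(n_obs) % k          # rows are already random, so no extra shuffle
    fold_errors = []
    for fold in range(k):
        val = fold_id == fold
        beta, *_ = np.linalg.lstsq(design[~val], y[~val], rcond=None)
        residuals = y[val] - design[val] @ beta
        fold_errors.append(np.mean(residuals ** 2))
    return float(np.mean(fold_errors))


# Best subset selection: score every one of the 2**p subsets with 5-fold CV
scores = {}
for size in range(p + 1):
    for features in combinations(range(p), size):
        scores[features] = cv_mse(X, y, features)

best = min(scores, key=scores.get)
print(f"{len(scores)} subsets scored, best subset: {best}, CV MSE {scores[best]:.4f}")
```

With p = 3 this is 5 × 8 = 40 fits; the count doubles with every additional predictor, which is what makes the brute-force approach expensive.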

Option 2: Forward Selection

- start with the null model (no predictors)

- find the best model with only one predictor

- find the best model containing that predictor and one further predictor

- ...

https://medium.com/codex/what-are-three-approaches-for-variable-selection-and-when-to-use-which-54de12f32464
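A sketch of greedy forward selection. The `score` callable stands for a validation or CV error (lower is better); `toy_error` is a hypothetical score used only to make the example runnable:

```python
def forward_selection(features, score):
    """Greedy forward stepwise selection.

    Returns the path of (subset, error) pairs from the null model up to the full model.
    """
    selected = ()
    path = [(selected, score(selected))]
    remaining = list(features)
    while remaining:
        # try adding each remaining predictor to the current model; keep the best one
        best_feature = min(remaining, key=lambda f: score(selected + (f,)))
        selected = selected + (best_feature,)
        remaining.remove(best_feature)
        path.append((selected, score(selected)))
    return path


def toy_error(subset):
    """Hypothetical score: 'a' and 'b' help, every predictor costs 0.5 (over-fitting penalty)."""
    return 10 - 4 * ("a" in subset) - 3 * ("b" in subset) + 0.5 * len(subset)


path = forward_selection(["a", "b", "c"], toy_error)
for subset, err in path:
    print(subset, err)
best_subset, best_err = min(path, key=lambda pair: pair[1])
print("best:", best_subset, best_err)   # best: ('a', 'b') 4.0
```

Instead of all 2^p subsets, forward selection scores at most 1 + p(p + 1)/2 models, at the price of possibly missing the best subset.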

Option 3: Backward Selection

- the reverse of forward selection

- start with all predictors

- drop the predictor whose removal causes the smallest decrease in model accuracy, and repeat
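The same idea in reverse, as a sketch: `score` again stands for a validation or CV error, and `toy_error` is a hypothetical score used only for illustration:

```python
def backward_selection(features, score):
    """Greedy backward stepwise selection; returns the path of (subset, error) pairs."""
    selected = tuple(features)
    path = [(selected, score(selected))]
    while selected:
        # candidate models: the current model with one predictor dropped
        candidates = [tuple(f for f in selected if f != drop) for drop in selected]
        # keep the candidate with the lowest error, i.e. drop the least useful predictor
        selected = min(candidates, key=score)
        path.append((selected, score(selected)))
    return path


def toy_error(subset):
    """Hypothetical score: 'a' and 'b' help, every predictor costs 0.5 (over-fitting penalty)."""
    return 10 - 4 * ("a" in subset) - 3 * ("b" in subset) + 0.5 * len(subset)


path = backward_selection(["a", "b", "c"], toy_error)
best_subset, best_err = min(path, key=lambda pair: pair[1])
print(best_subset, best_err)   # ('a', 'b') 4.0
```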

More Options:

- Automated Feature Selection

- Regularization (e.g., ridge regression or the lasso) can shrink the coefficients of unimportant predictors
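A sketch of the shrinkage effect using closed-form ridge regression on hypothetical synthetic data (only the first of four predictors matters). Ridge pulls coefficients towards zero without making them exactly zero; the lasso can set them exactly to zero, which is why it doubles as a feature selection method:

```python
import numpy as np

# Hypothetical synthetic data: only the first of four predictors matters
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 4))
y = 2.0 * X[:, 0] + rng.normal(scale=1.0, size=50)


def ridge(X, y, lam):
    """Closed-form ridge regression; no intercept, since the toy data are generated without one."""
    p = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ y)


for lam in [0.0, 1.0, 10.0, 100.0]:
    beta = ridge(X, y, lam)
    print(f"lambda={lam:>6}: coefficients={np.round(beta, 3)}, norm={np.linalg.norm(beta):.3f}")
```

As lambda grows, the overall size of the coefficient vector shrinks; lambda itself is a hyperparameter that would again be chosen by cross-validation.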

Case Study

Try to find the best possible linear model for predicting the penguin weight using

- best subset selection and

- cross-validation

https://www.scoopnest.com/user/AFP/1035147372572102656-do-you-know-your-gentoo-from-your-adelie-penguins-infographic-on-10-of-the-world39s-species-after

5.1 Cross-Validation and Model Selection - Resampling

40 minutes

Learning Summary

Now you can

- explain which questions to evaluate on the training, validation and test-sets

- perform LOO and k-fold cross-validation to create models that do not over-fit the training data